
[[File:NVIDIA - Hopper Architectures, Internals.png|thumb|The internals of an NVIDIA H100 Unit<ref>https://developer.nvidia.com/blog/nvidia-hopper-architecture-in-depth/</ref>]]

Because Linux runs 91.5% of supercomputers and is frequently used for professional tasks,<ref>Elad, Barry. ''Linux Statistics 2024 by Market Share, Usage Data, Number Of Users and Facts.[https://www.enterpriseappstoday.com/stats/linux-statistics.html]''</ref> '''Artificial Intelligence''' (AI) is widely used on [[Linux]], both in proprietary applications and in [[FOSS / FLOSS]] projects. Examples include voice changers, image generators, and '''Large Language Models''' (LLMs). If you are feeling frustrated or confused, this guide explains how to get results not only on '''NVIDIA''' cards, which are commonly used in the AI industry{{Citation needed}}, but also on '''Advanced Micro Devices''' (AMD) '''[https://www.amd.com/en/products/software/rocm.html ROCm]-capable Graphics Processing Units''' (GPUs). Both vendors' drivers can run AI applications, but actual support depends on each project and its developers.

* Keep in mind the philosophy of true freedom, both on the internet and in digital life{{Citation needed}}: exercise your freedoms with this technology as you see fit, but practice good ethics.

== Anaconda/Miniconda Setup ==

... (tomorrow's problem)

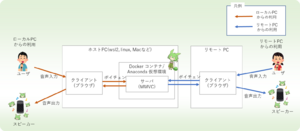

== w-okada's voice changer ==

[[File:W-okada voice-changer - Diagram.png|thumb|A high-level diagram of how w-okada's voice changer functions.<ref>https://github.com/w-okada/voice-changer</ref>|left]]

According to w-okada's English documentation, and as the repository name implies, it is an AI-powered "voice changer" tool.<ref>GitHub, ''voice-changer''[https://github.com/w-okada/voice-changer]</ref> It has numerous uses, including simple enjoyment, music covers, and anonymity.

==== NVIDIA setup ====

Setup for NVIDIA cards is particularly straightforward, especially on [[Arch Linux]], where drivers are readily available and work well for this application. If you are considering buying an NVIDIA card or using one you already have:

{| class="wikitable mw-collapsible"
|+Functionality and Post Setup
!4 GB VRAM cards or '''LESS'''
!''<u>With 4 GB or less (2 GB, 1 GB, etc.) you will likely struggle with w-okada at times: expect audible glitches and a lot of latency between your voice (input) and the new voice (output).</u>''
* 1 GB - Likely entirely unusable or extremely slow. Untested.
* 2 GB - Too slow for real-time computation; may be more useful as a [[Secondary GPU|Secondary/Dual GPU]] (potential guide here) for applications that do not need real-time processing. Untested.
* 4 GB - Very slow and buggy when used as your sole dedicated GPU. However, you '''will likely have an ideal experience''' when using '''Dual GPUs''' and can reach a Chunk Size of around 64-48. '''TESTED (Arch Linux, nvidia/nvidia-dkms, 32 GB RAM, NVIDIA GTX 1050 Ti GPU, AMD 5600X processor - will verify).'''
|-
!'''8 GB VRAM EXACT'''
!More often than not, 8 GB as an '''extra or dual GPU''' is sufficient for satisfactory results at reasonable latencies (100-250 ms on average). ('''SEMI-TESTED''')
* '''DUAL -''' For dual NVIDIA setups, '''make sure you reinstall your drivers if you are using a brand-new card!''' This prevents extreme overheating and fan noise. Otherwise, there should be no further concerns.
* '''SINGLE -''' Alongside applications that are not GPU-intensive, response time should lean towards the latter half of the usual range. Alongside more demanding games, however, expect an experience comparable to lower-end cards. '''Partition your resources.'''
|-
!'''12 GB or MORE'''
!Considering the general trend of Artificial Intelligence, '''12 GB of VRAM is ideal.''' ('''TESTED - GIGABYTE GeForce RTX 3060 12 GB''')

'''12 GB''' of VRAM should comfortably handle heavier workloads alongside other programs, and anything beyond that is sufficient for best performance.
* That much VRAM may be excessive for this task alone; for a '''dual GPU''', considering cost, it is likely better to stay with an 8 GB model.
|}

For voicechanger users, NVIDIA cards generally seem to perform as well on Linux as they do on Windows.
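To see which row of the table above applies to a given card, the installed VRAM can be read from <code>nvidia-smi</code>. The following is a minimal sketch and not part of w-okada's tooling: the helper name <code>vram_tier</code> and the rounding to whole gigabytes are assumptions made for illustration, and sizes that fall between the table's rows are simply reported as not covered.

```shell
# vram_tier MIB: map a VRAM size in MiB to a row of the table above.
# Hypothetical helper; the tiers mirror the table and nothing more.
vram_tier() {
    # Round to the nearest whole GB, since reported totals vary slightly.
    gb=$(( ($1 + 512) / 1024 ))
    if [ "$gb" -le 4 ]; then
        echo "4 GB or less"
    elif [ "$gb" -eq 8 ]; then
        echo "8 GB"
    elif [ "$gb" -ge 12 ]; then
        echo "12 GB or more"
    else
        echo "not covered by the table"
    fi
}

# Example (requires the NVIDIA driver; prints one line per GPU):
#   nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits |
#       while read -r mib; do vram_tier "$mib"; done
```

Only the function is defined when the block is sourced; the <code>nvidia-smi</code> pipeline is left as a comment so the snippet can be read or tested on a machine without a GPU.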

=== Setup ===

Clone the voicechanger repository:<syntaxhighlight lang="bash">
git clone https://github.com/w-okada/voice-changer
</syntaxhighlight>'''(Using Conda):''' Assuming you are in a blank ''base'' environment, create a new environment, ideally running Python 3.10.6 ('''this is for compatibility with w-okada'''): <syntaxhighlight lang="bash">
conda create --name voicechanger python=3.10.6
</syntaxhighlight>'''Make sure you have installed the NVIDIA drivers for your specific distribution. Consult the distribution's wiki page for more info.'''

At this point, activate your voicechanger environment:<syntaxhighlight lang="bash">
conda activate voicechanger
</syntaxhighlight>...and cd into the '''server''' directory, assuming you are still in the directory where you cloned the repository:<syntaxhighlight lang="bash">
cd voice-changer/server
</syntaxhighlight>Before your first run, install the packages listed in the requirements file:<syntaxhighlight lang="bash">
pip install -r requirements.txt
</syntaxhighlight>After installing, run MMVCServerSIO.py:<syntaxhighlight lang="bash">
python3 MMVCServerSIO.py
</syntaxhighlight>
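Before working through the steps above, it can help to confirm that the required tools are on your PATH and, later on, that the right Conda environment is active. This is a rough sketch under stated assumptions: <code>missing_cmds</code> and <code>in_env</code> are hypothetical helper names, and the environment check relies on the <code>CONDA_DEFAULT_ENV</code> variable that <code>conda activate</code> sets.

```shell
# missing_cmds CMD...: print each named command that is not on PATH.
# Hypothetical helper, not part of the voice-changer repository.
missing_cmds() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}

# in_env NAME: succeed only if the active Conda environment is NAME.
in_env() {
    [ "${CONDA_DEFAULT_ENV:-}" = "$1" ]
}

# Example:
#   missing_cmds git conda nvidia-smi   # prints nothing if all are present
#   in_env voicechanger || echo "run: conda activate voicechanger"
```

Keeping the checks as small functions means they can be sourced into a shell session and rerun whenever <code>pip install</code> or the server start fails unexpectedly.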

== Stable Diffusion Setup - WebUI ==

According to the project's main page, the Stable Diffusion WebUI is a "web interface for Stable Diffusion" that generates images from text prompts or from other images (image-to-image).<ref>AUTOMATIC1111, ''stable-diffusion-webui''. Main Page[https://github.com/AUTOMATIC1111/stable-diffusion-webui]</ref>

Latest revision as of 04:10, 17 July 2024


- https://developer.nvidia.com/blog/nvidia-hopper-architecture-in-depth/

- Elad, Barry. Linux Statistics 2024 by Market Share, Usage Data, Number Of Users and Facts. https://www.enterpriseappstoday.com/stats/linux-statistics.html

- https://github.com/w-okada/voice-changer

- GitHub, voice-changer. https://github.com/w-okada/voice-changer

- AUTOMATIC1111, stable-diffusion-webui. Main Page. https://github.com/AUTOMATIC1111/stable-diffusion-webui